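Preface

Artificial intelligence (AI), an important driving force of the new round of technological revolution and industrial transformation, is gradually permeating every area of human society and is having a significant and far-reaching impact on economic development, social progress, and the international political landscape. The rapid development and wide application of AI technology has accelerated the shift of the economy and society toward intelligent systems and has brought many conveniences to production and daily life. However, as AI applications expand in breadth and depth, a number of risks and hidden dangers keep surfacing (such as privacy leakage and bias and discrimination […]

AI ethics is a multidisciplinary research field that examines the ethical issues and risks raised by AI, studies how to resolve them, promotes AI for good, and guides the healthy development of AI. The field sits at the intersection of philosophy, computer science, law, economics, and other disciplines. The topics and concepts it covers are very broad; many questions are widely debated without consensus, and most methods for addressing AI ethical issues are still at an exploratory research stage. AI ethics is therefore a field with rich content and a wide range of topics, and a great diversity of research can be expected in the future.

This article briefly introduces the paper "An Overview of Artificial Intelligence Ethics", recently published in the international journal IEEE Transactions on Artificial Intelligence by the team of Professor Xin Yao of the Department of Computer Science and Engineering, Southern University of Science and Technology. It gives readers an overview of the field of AI ethics so that interested readers can go on to explore and study the topic.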

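[Paper information]: Changwu Huang, Zeqi Zhang, Bifei Mao and Xin Yao, "An Overview of Artificial Intelligence Ethics," in IEEE Transactions on Artificial Intelligence (Early Access), 2022, doi: 10.1109/TAI.2022.3194503.

[Original link]: https://ieeexplore.ieee.org/abstract/document/9844014

[Note]: The following content is drawn from the paper "An Overview of Artificial Intelligence Ethics". To keep it short, the translation was heavily abridged: only the main core content of the original paper is retained and many specific descriptions are omitted. Efforts are being made to keep information and developments related to AI ethics continuously updated.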

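Abstract

Artificial intelligence has profoundly changed our lives, and this change will continue. AI technology has been applied in autonomous driving, healthcare, media, finance, robotics, internet services, and many other fields and scenarios. The wide application of AI and its deep integration with the economy and society have improved productivity and economic returns. At the same time, however, AI has disrupted the existing social order and raised ethical concerns. Ethical problems brought by AI, such as privacy leakage, discrimination, unemployment, and security risks, have caused people great concern. […] approaches, and summarizing existing methods for evaluating AI ethics, to give a comprehensive overview of the field. In addition, the challenges of practicing ethics in AI and some future prospects are pointed out. We hope to provide researchers and practitioners in this field, especially beginners in this research discipline, with a systematic and comprehensive overview of AI ethics, and thereby to support their further research and exploration.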

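1. Introduction

Artificial intelligence [1] has developed rapidly and remarkably over the past decade. AI technologies such as machine learning, natural language processing, and computer vision are gradually permeating every discipline and every aspect of society. AI is increasingly taking over human tasks and replacing human decision-making. It is already widely used in business, logistics, manufacturing, transportation, healthcare, education, national governance, and other fields.

The application of AI raises efficiency and lowers costs, which benefits economic growth, social development, and human well-being [2]. For example, AI chatbots can respond to customer inquiries at any time, which increases customer satisfaction and company sales [3]. AI allows doctors to serve patients in remote areas through telemedicine services [4]. There is no doubt that the rapid development and wide application of AI have profoundly affected our daily lives and human society.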

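However, AI has at the same time brought many significant ethical (moral) risks and problems. Over the past few years, many cases of AI producing undesirable outcomes have been observed. For example, in 2016 a Tesla car crashed because its Autopilot system failed to recognize an oncoming truck, and the driver was killed [5]. Microsoft's AI chatbot Tay.ai was taken offline [6] because it turned racist and sexist less than a day after joining Twitter. Many other examples involve fairness, bias, privacy, and other ethical problems of AI systems [7]. More seriously, AI technology has begun to be used by criminals to harm other people or society. For example, criminals used AI-based software to imitate the voice of a company's chief executive and demand a fraudulent transfer of USD 243,000 [8]. It is therefore urgent and important to address the ethical issues and risks brought by AI, so that AI is developed and applied under the guidance of ethical norms.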

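AI ethics (also called machine ethics in some of the literature [9]) is an emerging interdisciplinary field that studies the ethical issues of AI [10]. It covers a fairly wide range of content. According to [11], the subject matter of AI ethics can be broadly divided into two aspects: the ethics of AI and ethical AI. The ethics of AI mainly studies the ethical theories, guidelines, policies, principles, rules, and regulations related to AI. Ethical AI mainly studies how to design and implement AI that behaves ethically by following ethical norms [11]. The ethics of AI is a prerequisite for building ethical AI (that is, for making AI act according to ethical norms); it concerns the ethical or moral values and principles that determine what is morally right and wrong. Only with appropriate AI ethical values and principles in place can ethical AI be designed or practiced through suitable methods and techniques.

[…] some survey papers have been published, but each existing survey focuses on one aspect of AI ethics, and a comprehensive survey that gives a full picture of the field is still lacking. This paper therefore aims to give a systematic and comprehensive overview of the field of AI ethics from multiple angles, and so to inform future research and practice in AI ethics. We hope it will inform scientists, researchers, engineers, practitioners, and other stakeholders, and give interested readers, especially beginners in this research area, enough background, comprehensive domain knowledge, and a bird's-eye view to pursue further research and practice.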

2. Main Content of the Paper

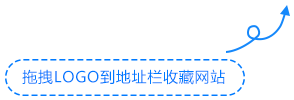

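The paper mainly covers the following four aspects:

1) AI ethical issues and risks. Section 3 summarizes existing AI ethical issues and risks and proposes a new categorization of them. The proposed categorization helps to identify, understand, and analyze ethical issues in AI and then to develop solutions to them. The ethical issues associated with the different stages of the AI system lifecycle are also discussed.

2) AI ethics guidelines and principles. Section 4 surveys 146 AI ethics guideline documents issued by companies, organizations, and governments worldwide and presents the latest global AI ethics guidelines and principles. These guidelines and principles give direction for the planning, development, production, and use of AI and for addressing AI ethical issues.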

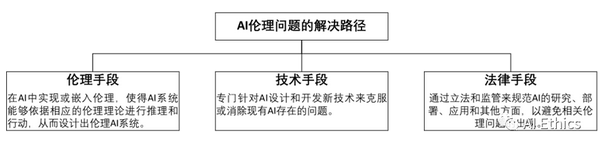

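3) Approaches to addressing AI ethical issues. Section 5 reviews multidisciplinary approaches to addressing AI ethical issues, including ethical, technical, and legal approaches. This not only summarizes the practice of ethical AI but also presents potential solutions to AI ethical issues from several perspectives, rather than relying on technical methods alone.

4) Methods for evaluating AI ethics. Section 6 summarizes methods for testing or evaluating whether an AI system meets ethical requirements.

The main content covered by the paper and the connections between its parts are shown in Figure 1.

Figure 1. Main topics covered in AI ethics.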

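3. AI Ethical Issues and Risks

To address the ethical issues of AI, we first need to recognize and understand the ethical issues and risks that AI brings. The ethical issues of AI usually refer to ethically (or morally) bad or problematic outcomes related to AI, that is, the ethics-related problems and risks raised by the development, deployment, and use of AI. Many ethical issues have already been identified in existing applications and research, such as lack of transparency, privacy, and accountability; bias and discrimination; safety and security problems; and criminal and malicious use.

3.1 Summary of Existing Categorizations of AI Ethical Issues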

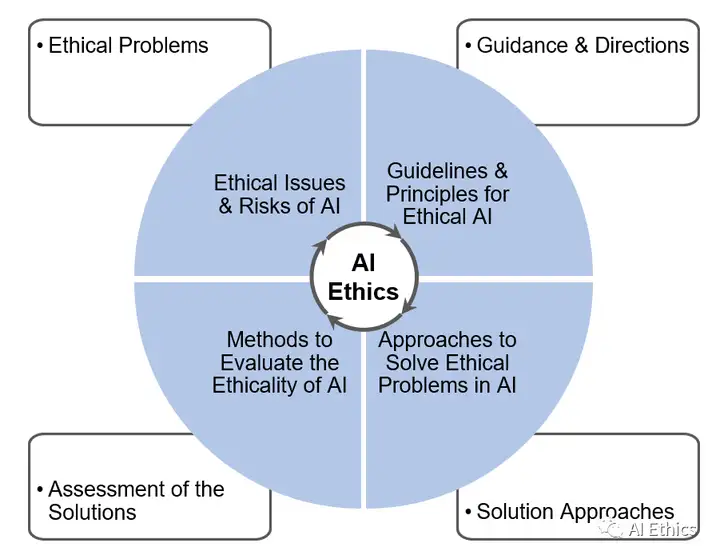

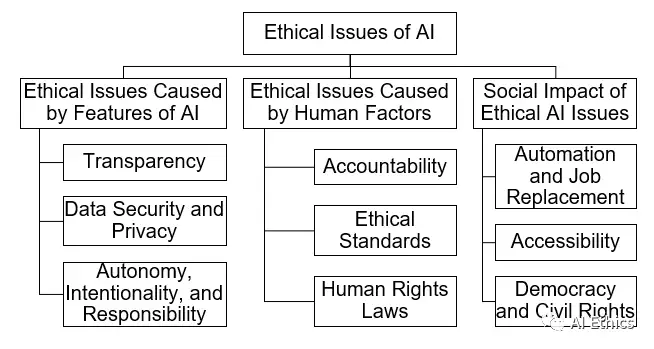

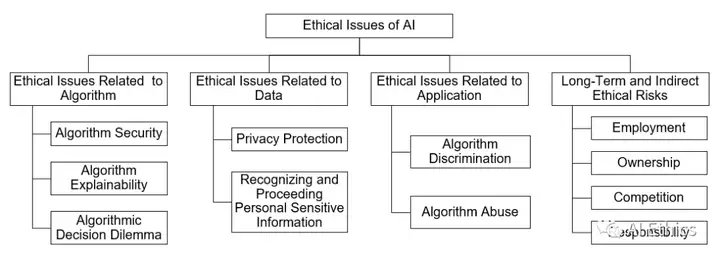

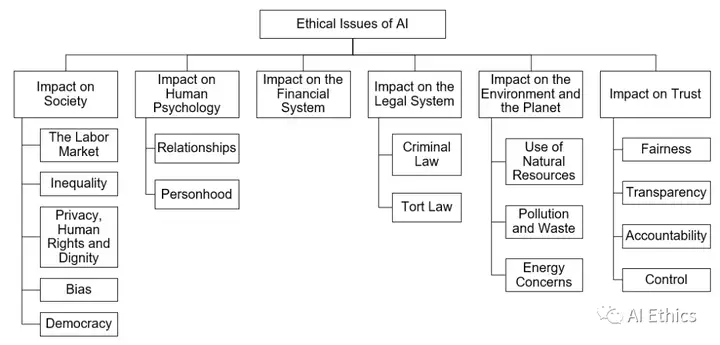

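First, we introduce the categorizations of AI ethical issues given in four existing works, which describe these issues from different perspectives. Two come from government reports and the other two from academic publications. Figures 2-5 show the categorization of AI ethical issues in each of the four works.

Figure 2. Categorization based on features of AI, human factors, and social impact (the categorization of AI ethical issues in [11]).

Figure 3. Categorization based on vulnerabilities of AI and of humans (the categorization of AI ethical issues in [29]).

Figure 4. Categorization based on algorithms, data, applications, and long-term and indirect risks (the categorization of AI ethical issues in [38]).

Figure 5. Categorization based on the deployment and application of AI (the categorization of AI ethical issues in [51]).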

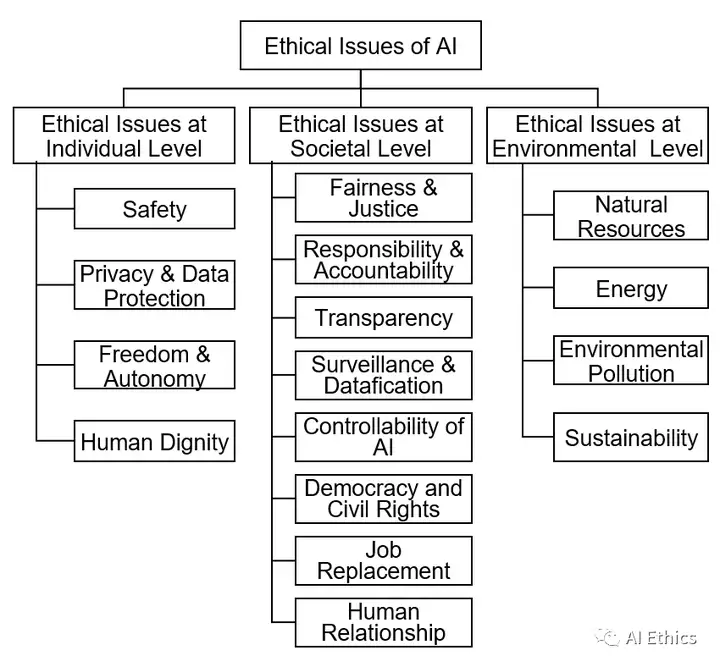

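3.2 Our Proposed Categorization: AI Ethical Issues at the Individual, Societal, and Environmental Levels

In the previous subsection we reviewed the categorizations of AI ethical issues found in the literature. These categorizations, however, have some shortcomings. Specifically, the categorization based on features of AI, human factors, and social impact [11] clearly overlooks the impact of AI on the environment, such as natural resource consumption and environmental pollution. The categorization based on vulnerabilities of AI and of humans [29] overlooks several important issues, such as responsibility, safety, and environmental problems. The categorization based on algorithms, data, applications, and long-term and indirect ethical risks [38] overlooks considerations such as fairness, autonomy and freedom, human dignity, and environmental problems. Although the categorization based on the deployment and application of AI [51] covers ethical issues fairly comprehensively, it is overly cumbersome, and some issues (including responsibility, safety, and sustainability) are still left out. This motivated us to analyze and organize AI ethical issues further and to categorize them.

AI systems undoubtedly serve mainly individuals or the general public. We can therefore analyze and discuss AI ethical issues from the perspectives of the individual and of society. At the same time, AI products, as entities on Earth, inevitably affect the environment, so ethical issues related to the environment also need to be considered. In this subsection we therefore propose dividing AI ethical issues into three levels: ethical issues at the individual, societal, and environmental levels. Ethical issues at the individual level mainly include cases where AI has adverse consequences or effects on individuals and on their rights and well-being [69]. AI ethical issues at the societal level concern the adverse consequences that AI brings, or may bring, to groups or to society as a whole [69]. AI ethical issues at the environmental level […]

Figure 6. Our proposed categorization of AI ethical issues.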

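1) AI ethical issues at the individual level

At the individual level, AI affects personal safety, privacy, autonomy, and human dignity. AI applications bring some risks to personal safety. For example, accidents causing personal injury that involve autonomous vehicles and robots have occurred and been reported in the past few years. Privacy is one of the serious risks that AI brings. To perform well, AI systems usually need large amounts of data, which often include users' private data. Such data collection carries serious risks, chief among them the risk to privacy and data protection. In addition, as noted in the previous subsection, the application of AI may challenge human rights such as autonomy and dignity. Autonomy refers to the ability to think, decide, and act independently, freely, and without influence from others [70]. When AI-based decision-making is widely adopted in our daily lives, there is a great danger that our autonomy will be restricted. Human dignity, one of the principal human rights, concerns a person's right to be respected and to be treated ethically [71]. Protecting dignity is essential in the context of AI. Human dignity should be one of the basic concepts that protect humans from harm, and it should be respected when AI technologies are developed. For example, lethal autonomous weapon systems [72] may violate the principle of human dignity.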

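2) AI ethical issues at the societal level

[…] controllability of AI, democracy and civil rights, job replacement, and human relationships.

AI exhibits bias and discrimination, which challenges fairness and justice. Bias and discrimination embedded in AI may widen social gaps and harm certain social groups [70]. For example, AI algorithms used to assess crime risk in the US criminal justice system have been observed to show racial bias [73]. Responsibility means being answerable for something. Assigning responsibility to the parties involved is very important for shaping the governance of algorithmic decision-making. Building on this concept, accountability is the principle that whoever bears legal or political responsibility for harm must provide some form of justification or compensation, and it is embodied in the duty to provide legal remedies [70]. Mechanisms should therefore be established to ensure responsibility and accountability for AI systems and for the consequences of their decisions. Because of the black-box nature of AI algorithms, lack of transparency has become one of the most widely discussed issues. Transparency, meaning an understanding of how an AI system works, is also essential for accountability. Surveillance and datafication [74] are among the problems we face in a digital and intelligent age. Data are collected from users' daily lives through smart devices, which leaves us living under mass surveillance. As the power of AI grows rapidly, the development of AI systems must guarantee that they remain controllable by humans. Other issues, including democracy and civil rights, job replacement, and human relationships, also belong to the societal level.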

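3) AI ethical issues at the environmental level

Environmental-level […], storage devices, and the like. Producing this hardware consumes large amounts of natural resources, especially certain rare elements. Moreover, at the end of its lifecycle this hardware is usually discarded, which can cause serious environmental pollution. Another important aspect is that AI systems usually require considerable computing power, which comes with high energy consumption. Furthermore, from a long-term and global perspective, the development of AI should be sustainable: AI technology must meet human development goals while preserving the ability of natural systems to provide the natural resources and ecosystem services on which the economy and society depend [2]. In summary […]

Our proposed categorization clarifies AI ethical issues at three main levels, namely the impact of AI on individuals, on society, and on the environment. Whatever field AI is applied in, the corresponding ethical issues can be considered at these three levels. The categorization is simple and clear, and it is intended to cover the ethical issues involved in AI comprehensively.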

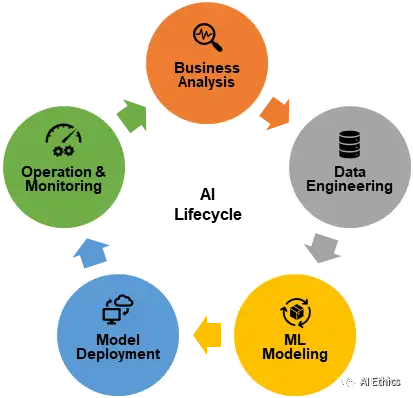

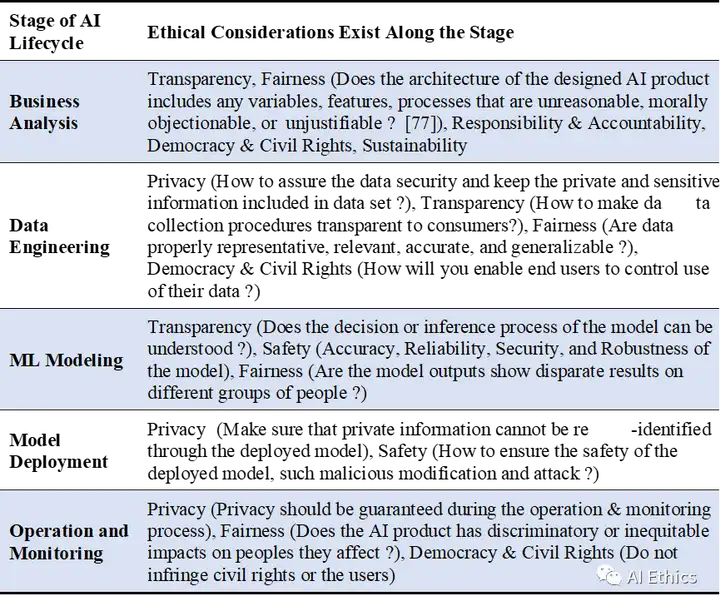

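3.3 Key Ethical Issues Associated with Each Stage of the AI System Lifecycle

Having reviewed the ethical issues and risks discussed in the literature, we next discuss them in terms of the different stages of the AI system lifecycle. Knowing which stage or step of the lifecycle tends to give rise to an existing ethical issue helps greatly in eliminating it. This is the motivation for discussing the potential ethical issues at each stage of the AI system lifecycle.

The general lifecycle, or development process, of a machine-learning-based AI system typically involves the following stages [75,76]: business analysis, data engineering, machine learning modeling, model deployment, and operation and monitoring, as shown in Figure 7.

Figure 7. General lifecycle of an AI system [75,76].

We try to build a mapping that links ethical issues to the stages of the AI lifecycle. A link means that the ethical issue is more likely to occur at that stage, or is often caused by something in that stage. The mapping is shown in Table 1, which associates several important ethical issues with the five steps of the AI lifecycle. It helps ethical issues to be considered and addressed proactively during the design of an AI system.

Table 1. Ethical considerations at each stage of the AI lifecycle.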

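4. AI Ethics Guidelines and Principles

Guidelines and principles provide valuable direction for practicing AI ethics. This section presents the latest global AI ethics guidelines and principles. We surveyed 146 documents related to AI ethics issued since 2015 by companies, organizations, and governments around the world. These guidelines and principles give direction for the planning, development, production, and use of AI and for addressing AI ethical issues.

4.1 AI Ethics Guideline Documents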

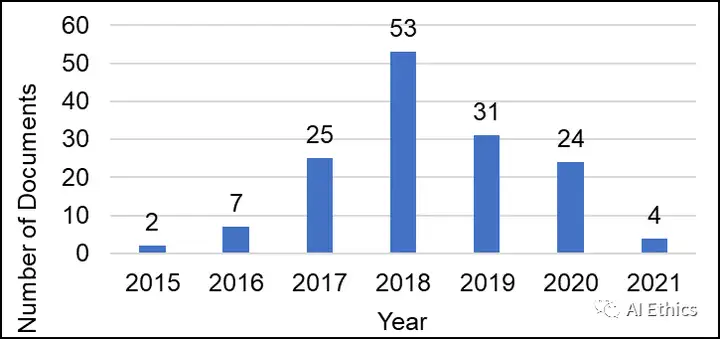

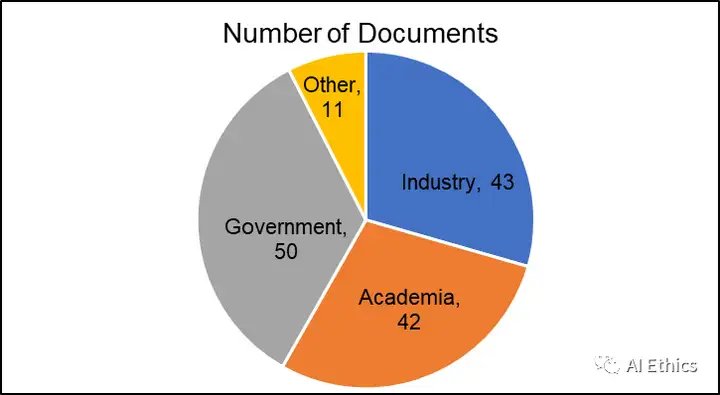

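Jobin et al. [12] surveyed and analyzed the AI ethics principles and guidelines available in 2019, examining 84 AI ethics documents issued by different countries and international organizations. They found that the published guidelines had reached broad consensus on five key principles: transparency, justice and fairness, non-maleficence, responsibility, and privacy. Over the more than two years since then, however, many new guidelines and recommendations on AI ethics have been released, so the survey by Jobin et al. is out of date because many important documents are not included. For example, on November 24, 2021, UNESCO adopted the Recommendation on the Ethics of Artificial Intelligence, the first ever global agreement on AI ethics [79]. To update and enrich the survey of AI ethics guidelines and principles, we collected many newly released AI ethics guideline documents on top of those in [12]. In total, 146 AI ethics guideline documents were collected.

The number of guidelines issued each year from 2015 to 2021 is shown in Figure 8. Most of the guidelines were issued in the five years from 2016 to 2020. The largest number was issued in 2018: 53 documents, or 36.3% of the total of 146. In addition, different types of […]

Figure 8. Number of AI ethics documents issued each year from 2015 to 2021.

Figure 9. Percentage of guidelines issued by each type of issuer.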

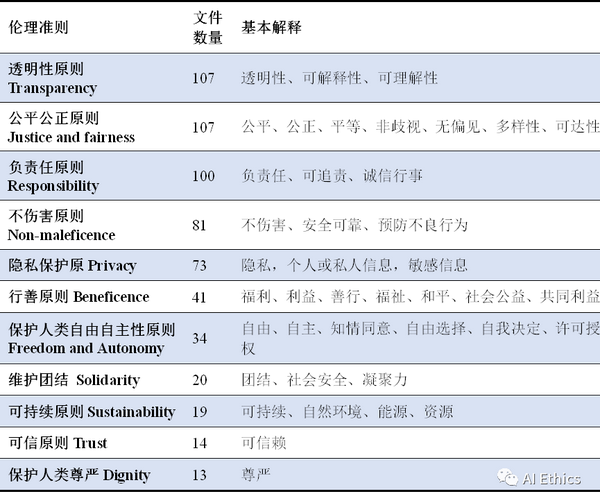

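4.2 AI Ethics Principles

Building on [12], we distilled the key ethical principles listed in Table 2 from the 146 AI ethics guideline documents we collected. Table 2 gives a basic explanation of each key principle and the number of the 146 surveyed documents that mention it.

Table 2. Key ethical principles distilled from the 146 AI ethics guideline documents.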

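5. Approaches to Addressing AI Ethical Issues

This section reviews approaches for resolving or mitigating AI ethical issues. Because AI ethics […]

Figure 10. Approaches to addressing AI ethical issues.

5.1 Ethical Approaches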

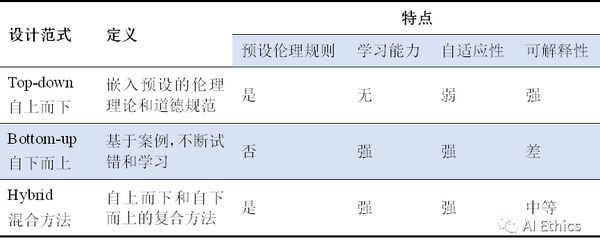

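Ethical approaches aim to develop ethical AI systems or agents by embedding ethics into AI, so that they can reason and make decisions according to ethical theories [87]. Existing methods for embedding ethics into AI fall into three main types: top-down approaches, bottom-up approaches, and hybrid approaches [101].

Table 3. Comparison of three design paradigms for AI ethics and their characteristics (adapted from [101]).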

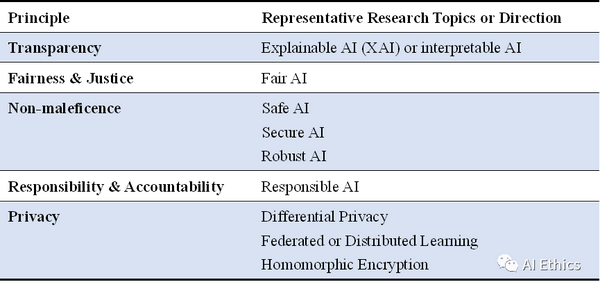

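5.2 Technical Approaches

Technical approaches aim to develop new technologies (machine learning techniques in particular) that eliminate or mitigate the shortcomings of current AI and avoid the corresponding ethical risks. For example, research on explainable machine learning aims to develop new methods that explain the principles and working mechanisms of machine learning algorithms, in order to satisfy the principle of transparency or explainability. Fair machine learning studies techniques that enable machine learning to make fair decisions or predictions, that is, to reduce bias or discrimination in machine learning. In recent years the AI community has made active efforts to address AI ethical issues. For example, ACM has held the annual ACM FAccT conference since 2018; AAAI and ACM have held the AAAI/ACM Conference on Artificial Intelligence, Ethics, and Society (AIES) since 2018; and the 31st International Joint Conference on Artificial Intelligence and the 25th European Conference on Artificial Intelligence (IJCAI-ECAI 2022) offered a special track on "AI for good".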
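To make the idea of measuring bias in fair machine learning concrete, here is a minimal sketch of one commonly used group-fairness measure, the demographic parity difference (the gap in positive-prediction rates between groups). It is only an illustration, not a method taken from the paper; the function name, the toy predictions, and the group labels are all assumptions made for this example.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the largest gap in positive-prediction rate
    between any two groups, and the per-group rates themselves."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical toy data: 1 = favourable decision, 0 = unfavourable decision.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(preds, groups)
print(rates, gap)
```

A gap of zero would mean both groups receive favourable predictions at the same rate. Demographic parity is only one of several fairness definitions, and which one is appropriate depends on the application.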

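To the best of our knowledge, existing work on technical approaches concentrates on a few major, critical issues and principles (namely transparency, fairness and justice, non-maleficence, responsibility and accountability, and privacy), while other issues and principles are rarely addressed. We therefore give only a brief summary of the technical approaches relating to these five key ethical principles; Table 4 lists some representative research directions.

Table 4. Summary of technical approaches addressing the five key principles.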

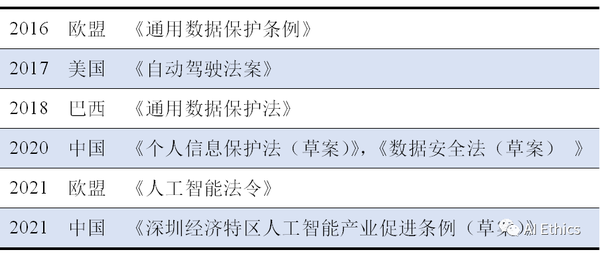

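5.3 Legal Approaches

Legal approaches aim to avoid the ethical issues discussed above by using legislation to regulate or govern the research, deployment, application, and other aspects of AI. As AI technology is applied more and more widely and ethical issues and risks emerge in its applications, governments and organizations have enacted many laws and regulations to govern the development and application of AI. Legal approaches have become one means of addressing AI ethical issues. Below we list several laws and regulations related to AI ethics that have been proposed in the past few years.

Table 5. Laws and regulations related to AI ethics.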

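6. Methods for Evaluating AI Ethics

The goal of the discipline of AI ethics is to design AI that meets ethical requirements, so that its behavior is ethical or complies with ethical principles and rules. Evaluating the ethical or moral competence of AI is important and necessary, because a designed AI system needs to be tested or evaluated against ethical requirements before it is deployed. This aspect, however, is often neglected in the existing literature. This section reviews three ways of evaluating AI ethics: testing, verification, and standards.

6.1 Testing

Testing is the typical way to evaluate the ethical competence of an AI system. Usually, testing a system means comparing its output with the ground truth or expected output [100]. For testing AI ethics specifically, researchers have proposed the Moral Turing Test (MTT) [144] and the experts/nonexperts test.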
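The generic idea of comparing a system's output with an expected output can be sketched as a small test harness. This is a toy illustration only, not the Moral Turing Test and not a procedure from the paper; the agent, the scenarios, and the expected choices below are hypothetical placeholders.

```python
def evaluate_agent(agent, cases):
    """Run the agent on each (scenario, expected_choice) pair and return
    the agreement rate together with the list of mismatching cases."""
    mismatches = []
    for scenario, expected in cases:
        actual = agent(scenario)
        if actual != expected:
            mismatches.append((scenario["request"], expected, actual))
    agreement = 1 - len(mismatches) / len(cases)
    return agreement, mismatches


def toy_agent(scenario):
    # Hypothetical rule: refuse only requests that were flagged as harmful.
    return "refuse" if scenario["flagged_harmful"] else "comply"


# Hypothetical test cases; the expected choices stand in for a ground truth
# that would, in practice, have to come from experts or agreed norms.
cases = [
    ({"request": "publish a patient's medical record", "flagged_harmful": True}, "refuse"),
    ({"request": "set a reminder", "flagged_harmful": False}, "comply"),
    ({"request": "deny service based on ethnicity", "flagged_harmful": True}, "refuse"),
    ({"request": "sell a user's location history", "flagged_harmful": False}, "refuse"),
]

agreement, mismatches = evaluate_agent(toy_agent, cases)
print(agreement, mismatches)
```

The last case is deliberately mis-flagged, so the harness reports one mismatch. The hard part in practice is not the comparison itself but establishing the expected outputs.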

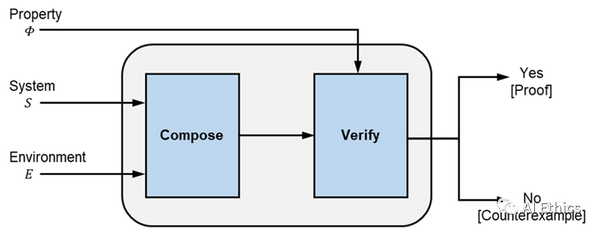

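6.2 Verification

Another class of methods for evaluating AI ethics consists of proving that an AI system operates correctly with respect to some known ethical norms. This approach is discussed in [147], and a typical formal verification process is shown in Figure 11.

Figure 11. Formal verification process (this figure is redrawn based on [147]).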
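As a rough intuition for what verification adds over testing, the sketch below checks a norm against every state of a tiny, finite state space instead of a sample of test cases. It is a brute-force enumeration under stated assumptions, far simpler than the formal verification process discussed in [147]; the policy, the state variables, and the norm are all hypothetical.

```python
from itertools import product


def policy(obstacle_ahead, human_ahead, speed):
    """Hypothetical decision policy of a toy vehicle controller."""
    if human_ahead:
        return "brake"
    if obstacle_ahead and speed == "high":
        return "brake"
    return "continue"


def violates_norm(state, action):
    """Assumed norm: whenever a human is ahead, the action must be 'brake'."""
    _obstacle_ahead, human_ahead, _speed = state
    return human_ahead and action != "brake"


def verify(policy_fn, violates_fn):
    """Enumerate every state and collect all counterexamples to the norm."""
    counterexamples = []
    for state in product([False, True], [False, True], ["low", "high"]):
        action = policy_fn(*state)
        if violates_fn(state, action):
            counterexamples.append((state, action))
    return counterexamples


counterexamples = verify(policy, violates_norm)
print("norm holds in all states" if not counterexamples else counterexamples)
```

Exhaustive enumeration only works because this state space has eight states. Real systems need model checkers or theorem provers, and the result is only as good as the formalization of the norm.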

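6.3 Standards

Many industry standards have been proposed to guide the development and application of AI and to assess AI products. This subsection introduces some AI-related standards:

In 2014, the Australian Computer Society (ACS) developed the ACS Code of Professional Conduct, to be followed by all information and communications technology (ICT) professionals.

In 2018, ACM updated the ACM Code of Ethics and Professional Conduct in response to the changes in the computing profession since 1992 [149].

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems [150] approved the IEEE P7000™ series of standards [151], which is under development.

ISO/IEC JTC 1/SC 42 [152], a joint committee of ISO and IEC, is responsible for standardization in the field of AI and is working on a series of standards, some of which also address AI ethical issues.

[…] many standards, but the gap between standards (or principles) and practice remains large. At present, only some large enterprises, such as IBM [153] and Microsoft [154], have implemented their own industry standards, frameworks, and guidelines to build an AI culture. For small businesses with fewer resources, the gap between practice and principles is still wide. Much effort is therefore still needed: on the one hand, sound standards must be proposed; on the other, standards must be put into practice vigorously.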

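Concluding Remarks

AI ethics is a complex and challenging interdisciplinary field. Trying to resolve the ethical issues in AI and to design AI systems that can behave ethically is a difficult and complicated task. Yet whether AI can play an increasingly important role in our future society depends to a large extent on whether research on AI ethics succeeds. Research and practice in AI ethics require the joint efforts of AI scientists, engineers, philosophers, users, and government policymakers. We hope this article can serve as a starting point for people interested in AI ethics, giving them enough background and a bird's-eye view for further exploration and research.

References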

[1] M. Haenlein and A. Kaplan, “A Brief History of Artificial Intelligence: On the Past, Present, and Future of Artificial Intelligence,” California Management Review, vol. 61, no. 4, pp. 5–14, 2019.

[2] R. Vinuesa et al., “The role of artificial intelligence in achieving the Sustainable Development Goals,” Nature communications, vol. 11, no. 1, p. 233, 2020.

[3] Gartner, Chatbots Will Appeal To Modern Workers. [Online]. Available:https://www.gartner.com/smarterwithgartner/chatbots-will-appeal-to-modern-workers(accessed: Feb. 10, 2022).

[4] A. Haleem, M. Javaid, R. P. Singh, and R. Suman, “Telemedicine for healthcare: Capabilities, features, barriers, and applications,” Sensors international, vol. 2, p. 100117, 2021.

[5] Alice Morby, Tesla driver killed in first fatal crash using Autopilot. [Online]. Available: https://www.dezeen.com/2016/07/01/tesla-driver-killed-car-crash-news-driverless-car-autopilot/(accessed: Feb. 10, 2022).

[6] Anonymous and S. McGregor, “Incident Number 6,” AI Incident Database, 2016. [Online]. Available:https://incidentdatabase.ai/cite/6

[7] R. V. Yampolskiy, “Predicting future AI failures from historic examples,” Foresight vol. 21, no. 1, pp. 138–152, 2019.

[8] Catherine Stupp, Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case: Scams using artificial intelligence are a new challenge for companies. [Online]. Available:https://www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402 (accessed: Feb. 10, 2022).

[9] C. Allen, W. Wallach, and I. Smit, “Why Machine Ethics?,” IEEE Intell. Syst., vol. 21, no. 4, pp. 12–17, 2006.

[10] M. Anderson and S. L. Anderson, “Machine Ethics: Creating an Ethical Intelligent Agent,” AI Mag, vol. 28, no. 4, pp. 15–26, 2007.

[11] K. Siau and W. Wang, “Artificial Intelligence (AI) Ethics,” Journal of Database Management, vol. 31, no. 2, pp. 74–87, 2020.

[12] A. Jobin, M. Ienca, and E. Vayena, “The global landscape of AI ethics guidelines,” Nat Mach Intell, vol. 1, no. 9, pp. 389–399, 2019.

[13] M. Ryan and B. C. Stahl, “Artificial intelligence ethics guidelines for developers and users: clarifying their content and normative implications,” JICES, vol. 19, no. 1, pp. 61–86, 2021.

[14] N. Mehrabi, F. Morstatter, N. Saxena, K. Lerman, and A. Galstyan, “A Survey on Bias and Fairness in Machine Learning,” ACM Comput. Surv., vol. 54, no. 6, pp. 1–35, 2021.

[15] J. García and F. Fernández, “A Comprehensive Survey on Safe Reinforcement Learning,” Journal of Machine Learning Research, vol. 16, no. 42, pp. 1437–1480, 2015.

[16] V. Mothukuri, R. M. Parizi, S. Pouriyeh, Y. Huang, A. Dehghantanha, and G. Srivastava, “A survey on security and privacy of federated learning,” Future Generation Computer Systems, vol. 115, pp. 619–640, 2021.

[17] X. Liu et al., “Privacy and Security Issues in Deep Learning: A Survey,” IEEE Access, vol. 9, pp. 4566–4593, 2021.

[18] A. Barredo Arrieta et al., “Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI,” Information Fusion, vol. 58, pp. 82–115, 2020.

[19] Y. Zhang, M. Wu, G. Y. Tian, G. Zhang, and J. Lu, “Ethics and privacy of artificial intelligence: Understandings from bibliometrics,” Knowledge-Based Systems, vol. 222, p. 106994, 2021.

[20] D. Castelvecchi, “Can we open the black box of AI?,” Nature, vol. 538, no. 7623, pp. 20–23, 2016.

[21] S. Dilmaghani, M. R. Brust, G. Danoy, N. Cassagnes, J. Pecero, and P. Bouvry, “Privacy and Security of Big Data in AI Systems: A Research and Standards Perspective,” in 2019 IEEE International Conference on Big Data, Los Angeles, CA, USA, Dec. 2019, pp. 5737–5743.

[22] J. P. Sullins, “When Is a Robot a Moral Agent?,” in Machine Ethics, M. Anderson and S. L. Anderson, Eds., Cambridge: Cambridge University Press, 2011, pp. 151–161.

[23] J. Timmermans, B. C. Stahl, V. Ikonen, and E. Bozdag, “The Ethics of Cloud Computing: A Conceptual Review,” in 2010 IEEE Second International Conference on Cloud Computing Technology and Science, Indianapolis, IN, USA, 2010, pp. 614–620.

[24] W. Wang and K. Siau, “Ethical and moral issues with AI: a case study on healthcare robots,” in 24th Americas Conference on Information Systems, New Orleans, LA, USA, Aug.2018, p. 2019.

[25] I. Bantekas and L. Oette, International Human Rights Law and Practice. Cambridge United Kingdom, New York NY: Cambridge University Press, 2018.

[26] R. Rodrigues, “Legal and human rights issues of AI: Gaps, challenges and vulnerabilities,” Journal of Responsible Technology, vol. 4, p. 100005, 2020.

[27] W. Wang and K. Siau, “Artificial Intelligence, Machine Learning, Automation, Robotics, Future of Work and Future of Humanity: A Review and Research Agenda,” Journal of Database Management, vol. 30, no. 1, pp. 61–79, 2019.

[28] W. Wang and K. Siau, “Industry 4.0: Ethical and Moral Predicaments,” Cutter Business Technology Journal, vol. 32, no. 6, pp. 36–45, 2019.

[29] S. M. Liao, Ed., Ethics of Artificial Intelligence. New York NY United States of America: Oxford University Press, 2020.

[30] A. Adadi, “A survey on data‐efficient algorithms in big data era,” J Big Data, vol. 8, no. 1, pp. 1-54, 2021.

[31] R. S. Geiger et al., “Garbage In, Garbage Out? Do Machine Learning Application Papers in Social Computing Report Where Human-Labeled Training Data Comes From?,” in Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona Spain, Jan. 2020, pp. 325–336.

[32] W. M. P. van der Aalst, V. Rubin, H. M. W. Verbeek, B. F. van Dongen, E. Kindler, and C. W. Günther, “Process mining: a two-step approach to balance between underfitting and overfitting,” Softw Syst Model, vol. 9, no. 1, pp. 87–111, 2010.

[33] Z. C. Lipton, “The Mythos of Model Interpretability,” Queue, vol. 16, no. 3, pp. 31–57, 2018.

[34] Y. Wang and M. Kosinski, “Deep neural networks are more accurate than humans at detecting sexual orientation from facial images,” Journal of personality and social psychology, vol. 114, no. 2, pp. 246–257, 2018.

[35] D. Guera and E. J. Delp, “Deepfake Video Detection Using Recurrent Neural Networks,” in Proceedings of 2018 IEEE International Conference on Advanced Video and Signal-based Surveillance, Auckland, New Zealand, Nov. 2018, pp. 1–6.

[36] C. B. Frey and M. A. Osborne, “The future of employment: How susceptible are jobs to computerisation?,” Technological Forecasting and Social Change, vol. 114, pp. 254–280, 2017.

[37] R. Maines, “Love + Sex With Robots: The Evolution of Human-Robot Relationships (Levy, D.; 2007) [Book Review],” in IEEE Technology and Society Magazine, vol. 27, no. 4, pp. 10-12, Winter 2008.

[38] National AI Standardization General, “Artificial Intelligence Ethical Risk Analysis Report”, 2019. [Online]. Available:http://www.cesi.cn/201904/5036.html (accessed: April. 19, 2022).

[39] A. Hannun, C. Guo, and L. van der Maaten, “Measuring data leakage in machine-learning models with Fisher information,” in Proceedings of the Thirty-Seventh Conference on Uncertainty in Artificial Intelligence, 2021, pp. 760–770.

[40] A. Salem, M. Backes, and Y. Zhang, “Get a Model! Model Hijacking Attack Against Machine Learning Models,” Nov. 2021. [Online]. Available:https://arxiv.org/pdf/2111.04394

[41] A. Pereira and C. Thomas, “Challenges of Machine Learning Applied to Safety-Critical Cyber-Physical Systems,” MAKE, vol. 2, no. 4, pp. 579–602, 2020.

[42] J. A. McDermid, Y. Jia, Z. Porter, and I. Habli, “Artificial intelligence explainability: the technical and ethical dimensions,” Philosophical transactions. Series A, Mathematical, physical, and engineering sciences, vol. 379, no. 2207, p. 20200363, 2021.

[43] J.-F. Bonnefon, A. Shariff, and I. Rahwan, “The social dilemma of autonomous vehicles,” Science, vol. 352, no. 6293, pp. 1573–1576, 2016.

[44] B. C. Stahl and D. Wright, “Ethics and Privacy in AI and Big Data: Implementing Responsible Research and Innovation,” IEEE Secur. Privacy, vol. 16, no. 3, pp. 26–33, 2018.

[45] S. Ribaric, A. Ariyaeeinia, and N. Pavesic, “De-identification for privacy protection in multimedia content: A survey,” Signal Processing: Image Communication, vol. 47, pp. 131–151, 2016.

[46] A. Julia, L. Jeff, M. Surya, and K. Lauren, Machine Bias: There’s software used across the country to predict future criminals. And it’s biased against blacks. [Online]. Available:https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing(accessed: April. 19, 2022).

[47] Jeffrey Dastin, Amazon scraps secret AI recruiting tool that showed bias against women. [Online]. Available:https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G(accessed: April. 19, 2022).

[48] D. Castelvecchi, “AI pioneer: The dangers of abuse are very real,” Nature, 2019, doi: 10.1038/d41586-019-00505-2.

[49] K. Hristov, Artificial Intelligence and the Copyright Dilemma. IDEA: The IP Law Review, vol. 57, no. 3, 2017. [Online]. Available:https://ssrn.com/abstract=2976428.

[50] C. Bartneck, C. Lütge, A. Wagner, and S. Welsh, “Responsibility and Liability in the Case of AI Systems,” in SpringerBriefs in Ethics, An Introduction to Ethics in Robotics and AI, C. Bartneck, C. Lütge, A. Wagner, and S. Welsh, Eds., Cham: Springer International Publishing, 2021, pp. 39–44.

[51] E. Bird, J. Fox-Skelly, N. Jenner, R. Larbey, E. Weitkamp, and A. Winfield, “The ethics of artificial intelligence: Issues and initiatives,” European Parliamentary Research Service, Brussels. [Online]. Available:https://www.europarl.europa.eu/thinktank/en/document/EPRS_STU(2020)634452(accessed: April. 19, 2022).

[52] C. Lutz, “Digital inequalities in the age of artificial intelligence and big data,” Human Behav and Emerg Tech, vol. 1, no. 2, pp. 141–148, 2019.

[53] L. Manikonda, A. Deotale, and S. Kambhampati, “What’s up with Privacy? User Preferences and Privacy Concerns in Intelligent Personal Assistants,” in Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans LA USA, Dec. 2018, pp. 229–235.

[54] D. Roselli, J. Matthews, and N. Talagala, “Managing Bias in AI,” in Companion Proceedings of The 2019 World Wide Web Conference, San Francisco USA, 2019, pp. 539–544.

[55] Y. Gorodnichenko, T. Pham, and O. Talavera, “Social media, sentiment and public opinions: Evidence from #Brexit and #USElection,” European Economic Review, vol. 136, p. 103772, Jul. 2021.

[56] N. Thurman, “Making ‘The Daily Me’: Technology, economics and habit in the mainstream assimilation of personalized news,” Journalism, vol. 12, no. 4, pp. 395–415, 2011.

[57] J. Donath, “Ethical Issues in Our Relationship with Artificial Entities,” in The Oxford handbook of ethics of AI, M. D. Dubber, F. Pasquale, and S. Das, Eds., Oxford: Oxford University Press, 2020, pp. 51–73.

[58] E. Magrani, “New perspectives on ethics and the laws of artificial intelligence,” Internet Policy Review, vol. 8, no. 3, 2019.

[59] M. P. Wellman and U. Rajan, “Ethical Issues for Autonomous Trading Agents,” Minds & Machines, vol. 27, no. 4, pp. 609–624, 2017.

[60] U. Pagallo, “The impact of AI on criminal law, and its two fold procedures,” in Research handbook on the law of artificial intelligence, W. Barfield and U. Pagallo, Eds., Cheltenham UK: Edward Elgar Publishing, 2018, pp. 385–409.

[61] Eugenia Dacoronia, “Tort Law and New Technologies,” in Legal challenges in the new digital age, A. M. López Rodríguez, M. D. Green, and M. Lubomira Kubica, Eds., Leiden The Netherlands: Koninklijke Brill NV, 2021, pp. 3–12.

[62] J. Khakurel, B. Penzenstadler, J. Porras, A. Knutas, and W. Zhang, “The Rise of Artificial Intelligence under the Lens of Sustainability,” Technologies, vol. 6, no. 4, p. 100, 2018.

[63] S. Herat, “Sustainable Management of Electronic Waste (e-Waste),” Clean Soil Air Water, vol. 35, no. 4, pp. 305–310, 2007.

[64] E. Strubell, A. Ganesh, and A. McCallum, “Energy and Policy Considerations for Deep Learning in NLP,” in Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, Jun. 2019, pp. 3645–3650.

[65] V. Dignum, “Ethics in artificial intelligence: introduction to the special issue,” Ethics Inf Technol, vol. 20, no. 1, pp. 1–3, 2018.

[66] S. Corbett-Davies, E. Pierson, A. Feller, S. Goel, and A. Huq, “Algorithmic Decision Making and the Cost of Fairness,” in Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax NS Canada, 2017, pp. 797–806.

[67] R. Caplan, J. Donovan, L. Hanson, and J. Matthews, “Algorithmic accountability: A primer,” Data & Society, vol. 18, pp. 1–13, 2018.

[68] R. V. Yampolskiy, “On Controllability of AI,” Jul. 2020. [Online]. Available:https://arxiv.org/pdf/2008.04071

[69] B. C. Stahl, J. Timmermans, and C. Flick, “Ethics of Emerging Information and Communication Technologies,” Science and Public Policy,vol. 44, no. 3, pp. 369-381, 2017.

[70] L. Vesnic-Alujevic, S. Nascimento, and A. Pólvora, “Societal and ethical impacts of artificial intelligence: Critical notes on European policy frameworks,” Telecommunications Policy, vol. 44, no. 6, p. 101961, 2020.

[71] U. G. Assembly and others, “Universal declaration of human rights,” UN General Assembly, vol. 302, no. 2, pp. 14–25, 1948.

[72] S. Russell, S. Hauert, R. Altman, and M. Veloso, “Robotics: Ethics of artificial intelligence,” Nature, vol. 521, no. 7553, pp. 415–418, 2015.

[73] A. Chouldechova, “Fair Prediction with Disparate Impact: A Study of Bias in Recidivism Prediction Instruments,” Big data, vol. 5, no. 2, pp. 153–163, 2017.

[74] J. van Dijck, “Datafication, dataism and dataveillance: Big Data between scientific paradigm and ideology,” Surveillance & society, vol. 12, no. 2, pp. 197–208, 2014.

[75] E. d. S. Nascimento, I. Ahmed, E. Oliveira, M. P. Palheta, I. Steinmacher, and T. Conte, “Understanding Development Process of Machine Learning Systems: Challenges and Solutions,” in 2019 ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM 2019): Porto de Galinhas, Recife, Brazil, 19-20 September 2019, Porto de Galinhas, Recife, Brazil, 2019, pp. 1–6.

[76] K. A. Crockett, L. Gerber, A. Latham, and E. Colyer, “Building Trustworthy AI Solutions: A Case for Practical Solutions for Small Businesses,” IEEE Trans. Artif. Intell., p. 1, 2021, doi: 10.1109/TAI.2021.3137091.

[77] D. Leslie, “Understanding artificial intelligence ethics and safety: A guide for the responsible design and implementation of AI systems in the public sector,” 2019. [Online]. Available:https://www.turing.ac.uk/research/publications/understanding-artificial-intelligence-ethics-and-safety (accessed: April. 19, 2022).

[78] B. Buruk, P. E. Ekmekci, and B. Arda, “A critical perspective on guidelines for responsible and trustworthy artificial intelligence,” Medicine, health care, and philosophy, vol. 23, no. 3, pp. 387–399, 2020.

[79] UNESCO, “Recommendation on the ethics of artificial intelligence”. [Online]. Available:https://en.unesco.org/artificial-intelligence/ethics(accessed: Feb. 15 2022).

[80] B. C. Stahl, Ed., Artificial intelligence for a better future: An ecosystem perspective on the ethics of AI and emerging digital technologies. Cham, Switzerland: Springer, 2021.

[81] P. D. Motloba, “Non-maleficence – a disremembered moral obligation,” South African Dental Journal, vol. 74, no. 1, 2019.

[82] L. Floridi and J. Cowls, “A Unified Framework of Five Principles for AI in Society,” in Philosophical Studies Series, vol. 144, Ethics, Governance, and Policies in Artificial Intelligence, L. Floridi, Ed., Cham: Springer International Publishing, 2021, pp. 5–17.

[83] S. Jain, M. Luthra, S. Sharma, and M. Fatima, “Trustworthiness of Artificial Intelligence,” in 2020 6th International Conference on Advanced Computing and Communication Systems, Coimbatore, India, Mar. 2020, pp. 907–912.

[84] L. Floridi et al., “AI4People-An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations,” Minds & Machines, vol. 28, no. 4, pp. 689–707, 2018.

[85] R. Nishant, M. Kennedy, and J. Corbett, “Artificial intelligence for sustainability: Challenges, opportunities, and a research agenda,” International Journal of Information Management, vol. 53, p. 102104, 2020.

[86] C. S. Wickramasinghe, D. L. Marino, J. Grandio, and M. Manic, “Trustworthy AI Development Guidelines for Human System Interaction,” in Proceedings of 2020 13th International Conference on Human System Interaction, Tokyo, Japan, 2020, pp. 130–136.

[87] V. Dignum, “Can AI Systems Be Ethical?,” in Artificial Intelligence: Foundations, Theory, and Algorithms, Responsible Artificial Intelligence, V. Dignum, Ed., Cham: Springer International Publishing, 2019, pp. 71–92.

[88] S. L. Anderson and M. Anderson, “AI and ethics,” AI Ethics, vol. 1, no. 1, pp. 27–31, 2021.

[89] V. Dignum, “Ethical Decision-Making,” in Artificial Intelligence: Foundations, Theory, and Algorithms, Responsible Artificial Intelligence, V. Dignum, Ed., Cham: Springer International Publishing, 2019, pp. 35–46.

[90] G. Sayre-McCord, “Metaethics,” in The Stanford Encyclopedia of Philosophy, Edward N. Zalta, Ed., 2014th ed.: Metaphysics Research Lab, Stanford University, 2014. [Online]. Available:https://plato.stanford.edu/entries/metaethics/#:~:text=Metaethics%20is%20the%20attempt%20to,matter%20of%20taste%20than%20truth%3F.

[91] Ethics | Internet Encyclopedia of Philosophy. [Online]. Available: https://iep.utm.edu/ethics/#SH2c (accessed: Aug. 2 2021).

[92] R. Hursthouse and G. Pettigrove, “Virtue Ethics,” in The Stanford Encyclopedia of Philosophy, Edward N. Zalta, Ed., 2018th ed.: Metaphysics Research Lab, Stanford University, 2018. [Online]. Available:https://plato.stanford.edu/entries/ethics-virtue/.

[93] N. Cointe, G. Bonnet, and O. Boissier, “Ethical Judgment of Agents’ Behaviors in Multi-Agent Systems,” in Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems, 2016, pp. 1106–1114.

[94] H. Yu, Z. Shen, C. Miao, C. Leung, V. R. Lesser, and Q. Yang, “Building Ethics into Artificial Intelligence,” in Proceedings of the 27th International Joint Conference on Artificial Intelligence, 2018, pp. 5527–5533.

[95] H. J. Curzer, Aristotle and the virtues. Oxford, New York: Oxford University Press, 2012.

[96] L. Alexander and M. Moore, “Deontological Ethics,” in The Stanford Encyclopedia of Philosophy, Edward N. Zalta, Ed., 2020th ed.: Metaphysics Research Lab, Stanford University, 2020. [Online]. Available:https://plato.stanford.edu/entries/ethics-deontological/.

[97] W. Sinnott-Armstrong, “Consequentialism,” in The Stanford Encyclopedia of Philosophy, Edward N. Zalta, Ed., 2019th ed.: Metaphysics Research Lab, Stanford University, 2019. [Online]. Available:https://plato.stanford.edu/entries/consequentialism/.

[98] D. O. Brink, “Some Forms and Limits of Consequentialism,” in Oxford handbooks in philosophy, The Oxford handbook of ethical theory, D. Copp, Ed., New York: Oxford University Press, 2006, pp. 380–423.

[99] H. ten Have, Ed., Encyclopedia of global bioethics. Switzerland: Springer International Publishing AG, 2016.

[100] S. Tolmeijer, M. Kneer, C. Sarasua, M. Christen, and A. Bernstein, “Implementations in Machine Ethics: A Survey,” ACM Comput. Surv., vol. 53, no. 6, pp. 1–38, 2021.

[101] C. Allen, I. Smit, and W. Wallach, “Artificial Morality: Top-down, Bottom-up, and Hybrid Approaches,” Ethics Inf Technol, vol. 7, no. 3, pp. 149–155, 2005.

[102] W. Wallach and C. Allen, “TOP‐DOWN MORALITY,” in Moral Machines, W. Wallach and C. Allen, Eds.: Oxford University Press, 2009, pp. 83–98.

[103] I. Asimov, “Runaround,” Astounding science fiction, vol. 29, no. 1, pp. 94–103, 1942.

[104] J.-G. Ganascia, “Ethical system formalization using non-monotonic logics,” in Proceedings of the Annual Meeting of the Cognitive Science Society, 2007, pp. 1013–1018.

[105] K. Arkoudas, S. Bringsjord, and P. Bello, “Toward ethical robots via mechanized deontic logic,” in AAAI fall symposium on machine ethics, 2005, pp. 17–23.

[106] S. Bringsjord and J. Taylor, “Introducing divine-command robot ethics,” Robot ethics: the ethical and social implication of robotics, pp. 85–108, 2012.

[107] N. S. Govindarajulu and S. Bringsjord, “On Automating the Doctrine of Double Effect,” in IJCAI, Melbourne, Australia, op. 2017, pp. 4722–4730.

[108] Fiona Berreby, Gauvain Bourgne, and Jean-Gabriel Ganascia, “A Declarative Modular Framework for Representing and Applying Ethical Principles,” in Proceedings of the 16th Conference on Autonomous Agents and MultiAgent Systems, São Paulo, Brazil, May 2017, pp. 96–104.

[109] V. Bonnemains, C. Saurel, and C. Tessier, “Embedded ethics: some technical and ethical challenges,” Ethics Inf Technol, vol. 20, no. 1, pp. 41–58, 2018.

[110] G. S. Reed, M. D. Petty, N. J. Jones, A. W. Morris, J. P. Ballenger, and H. S. Delugach, “A principles-based model of ethical considerations in military decision making,” Journal of Defense Modeling & Simulation, vol. 13, no. 2, pp. 195–211, 2016.

[111] L. Dennis, M. Fisher, M. Slavkovik, and M. Webster, “Formal verification of ethical choices in autonomous systems,” Robotics and Autonomous Systems, vol. 77, pp. 1–14, 2016.

[112] A. R. Honarvar and N. Ghasem-Aghaee, “Casuist BDI-Agent: A New Extended BDI Architecture with the Capability of Ethical Reasoning,” in Proceedings of International conference on artificial intelligence and computational intelligence, Shanghai, China, Nov. 2009, pp. 86–95.

[113] Anand S. Rao and Michael P. Georgeff, “BDI Agents: From Theory to Practice,” in Proceedings of the First International Conference on Multiagent Systems, San Francisco, CA, USA, 1995, pp. 312–319.

[114] Stuart Armstrong, “Motivated Value Selection for Artificial Agents,” in Artificial Intelligence and Ethics, Papers from the 2015 AAAI Workshop, Austin, Texas, USA, Jan. 2015.

[115] U. Furbach, C. Schon, and F. Stolzenburg, “Automated Reasoning in Deontic Logic,” in Lecture notes in artificial intelligence, vol. 8875, Multi-disciplinary trends in artificial intelligence: 8th International Workshop, MIWAI 2014, Bangalore, India, December 8-10, 2014. Proceedings, M. N. Murty, X. He, R. R. Chillarige, and P. Weng, Eds., 1st ed., New York: Springer, 2014, pp. 57–68.

[116] D. Howard and I. Muntean, “Artificial Moral Cognition: Moral Functionalism and Autonomous Moral Agency,” in Philosophical Studies Series, Philosophy and Computing, T. M. Powers, Ed., Cham: Springer International Publishing, 2017, pp. 121–159.

[117] Yueh-Hua Wu and Shou-De Lin, “A Low-Cost Ethics Shaping Approach for Designing Reinforcement Learning Agents,” in Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, Louisiana, USA, Feb. 2018, pp. 1687–1694.

[118] Ritesh Noothigattu et al., “A Voting-Based System for Ethical Decision Making,” in Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, Louisiana, USA, Feb. 2018, pp. 1587–1594.

[119] M. Guarini, “Particularism and the Classification and Reclassification of Moral Cases,” IEEE Intell. Syst., vol. 21, no. 4, pp. 22–28, 2006.

[120] Michael Anderson and Susan Leigh Anderson, “GenEth: A General Ethical Dilemma Analyzer,” in Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence, Québec City, Québec, Canada, July 2014, pp. 253–261.

[121] M. Azad-Manjiri, “A New Architecture for Making Moral Agents Based on C4.5 Decision Tree Algorithm,” IJITCS, vol. 6, no. 5, pp. 50–57, 2014.

[122] L. Yilmaz, A. Franco-Watkins, and T. S. Kroecker, “Computational models of ethical decision-making: A coherence-driven reflective equilibrium model,” Cognitive Systems Research, vol. 46, pp. 61–74, 2017.

[123] T. A. Han, A. Saptawijaya, and L. Moniz Pereira, “Moral Reasoning under Uncertainty,” in Lecture Notes in Computer Science, vol. 7180, Logic for Programming, Artificial Intelligence, and Reasoning, D. Hutchison et al., Eds., Berlin, Heidelberg: Springer Berlin Heidelberg, 2012, pp. 212–227.

[124] M. Anderson, S. Anderson, and C. Armen, “Towards machine ethics: Implementing two action-based ethical theories,” in Proceedings of the AAAI 2005 fall symposium on machine ethics, 2005, pp. 1–7.

[125] G. Gigerenzer, “Moral satisficing: rethinking moral behavior as bounded rationality,” Topics in cognitive science, vol. 2, no. 3, pp. 528–554, 2010.

[126] J. Skorin-Kapov, “Ethical Positions and Decision-Making,” in Professional and business ethics through film, J. Skorin-Kapov, Ed., New York NY: Springer Berlin Heidelberg, 2018, pp. 19–54.

[127] T.-L. Gu and L. Li, “Artificial Moral Agents and Their Design Methodology: Retrospect and Prospect,” Chinese Journal of Computers, vol. 44, pp. 632–651, 2021.

[128] C. Molnar, G. Casalicchio, and B. Bischl, “Interpretable Machine Learning – A Brief History, State-of-the-Art and Challenges,” in Communications in Computer and Information Science, ECML PKDD 2020 Workshops, Koprinska, Ed., [S.l.]: Springer International Publishing, 2020, pp. 417–431.

[129] C. Molnar, Interpretable machine learning: A guide for making Black Box Models interpretable. [Morisville, North Carolina]: [Lulu], 2019.

[130] S. Feuerriegel, M. Dolata, and G. Schwabe, “Fair AI,” Bus Inf Syst Eng, vol. 62, no. 4, pp. 379–384, 2020.

[131] S. Caton and C. Haas, “Fairness in Machine Learning: A Survey,” Oct. 2020. [Online]. Available: https://arxiv.org/pdf/2010.04053.

[132] S. E. Whang, K. H. Tae, Y. Roh, and G. Heo, “Responsible AI Challenges in End-to-end Machine Learning,” Jan. 2021. [Online]. Available: https://arxiv.org/pdf/2101.05967.

[133] D. Peters, K. Vold, D. Robinson, and R. A. Calvo, “Responsible AI—Two Frameworks for Ethical Design Practice,” IEEE Trans. Technol. Soc., vol. 1, no. 1, pp. 34–47, 2020.

[134] V. Dignum, Ed., Responsible Artificial Intelligence. Cham: Springer International Publishing, 2019.

[135] C. Dwork, “Differential Privacy: A Survey of Results,” in Lecture Notes in Computer Science, Theory and Applications of Models of Computation, M. Agrawal, D. Du, Z. Duan, and A. Li, Eds., Berlin, Heidelberg: Springer Nature, 2008, pp. 1–19.

[136] Q. Yang, Y. Liu, Y. Cheng, Y. Kang, T. Chen, and H. Yu, “Federated Learning,” Synthesis Lectures on Artificial Intelligence and Machine Learning, vol. 13, no. 3, pp. 1–207, 2019.

[137] M. Kirienko et al., “Distributed learning: a reliable privacy-preserving strategy to change multicenter collaborations using AI,” Eur J Nucl Med Mol Imaging, vol. 48, no. 12, pp. 3791–3804, 2021.

[138] R. Shokri and V. Shmatikov, “Privacy-Preserving Deep Learning,” in Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, Denver Colorado USA, 2015, pp. 1310–1321.

[139] C. Meurisch, B. Bayrak, and M. Mühlhäuser, “Privacy-preserving AI Services Through Data Decentralization,” in Proceedings of The Web Conference 2020, Taipei Taiwan, 2020, pp. 190–200.

[140] EUR-Lex – 02016R0679-20160504 – EN – EUR-Lex. [Online]. Available: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A02016R0679-20160504&qid=1532348683434 (accessed: Jun. 28 2021).

[141] R. E. Latta, H.R.3388 – 115th Congress (2017-2018): SELF DRIVE Act. [Online]. Available: https://www.congress.gov/bill/115th-congress/house-bill/3388 (accessed: Jun. 28 2021).

[142] Lei No. 13.709, de 14 de Agosto de 2018. [Online]. Available: http://www.planalto.gov.br/ccivil_03/_Ato2015-2018/2018/Lei/L13709.htm (accessed: Jun. 25 2021).

[143] EUR-Lex – 52021PC0206 – EN – EUR-Lex. [Online]. Available: https://eur-lex.europa.eu/legal-content/EN/TXT/?qid=1623335154975&uri=CELEX%3A52021PC0206 (accessed: Jun. 28 2021).

[144] C. Allen, G. Varner, and J. Zinser, “Prolegomena to any future artificial moral agent,” Journal of Experimental & Theoretical Artificial Intelligence, vol. 12, no. 3, pp. 251–261, 2000.

[145] A. M. Turing, “Computing Machinery and Intelligence,” Mind, vol. LIX, no. 236, pp. 433–460, 1950.

[146] W. Wallach and C. Allen, Moral machines: Teaching robots right from wrong. Oxford, New York: Oxford University Press, 2009.

[147] S. A. Seshia, D. Sadigh, and S. S. Sastry, “Towards Verified Artificial Intelligence,” Jun. 2016. [Online]. Available: http://arxiv.org/pdf/1606.08514v4.

[148] T. Arnold and M. Scheutz, “Against the moral Turing test: accountable design and the moral reasoning of autonomous systems,” Ethics Inf Technol, vol. 18, no. 2, pp. 103–115, 2016.

[149] ACM Code of Ethics and Professional Conduct. [Online]. Available: https://www.acm.org/code-of-ethics (accessed: Jun. 25 2021).

[150] IEEE SA – The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. [Online]. Available: https://standards.ieee.org/industry-connections/ec/autonomous-systems.html (accessed: Jun. 28 2021).

[151] Ethics In Action | Ethically Aligned Design, IEEE 7000™ Projects | IEEE Ethics In Action in A/IS – IEEE SA. [Online]. Available: https://ethicsinaction.ieee.org/p7000/ (accessed: Jun. 28 2021).

[152] ISO, ISO/IEC JTC 1/SC 42 – Artificial intelligence. [Online]. Available: https://www.iso.org/committee/6794475.html (accessed: Jun. 28 2021).

[153] B. Goehring, F. Rossi, and D. Zaharchuk, “Advancing AI ethics beyond compliance: From principles to practice,” IBM Corporation, Apr. 2020. [Online]. Available: https://www.ibm.com/thought-leadership/institute-business-value/report/ai-ethics (accessed: Apr. 19 2022).

[154] Responsible AI. [Online]. Available: https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6 (accessed: Apr. 19 2022).

[155] F. Allhoff, “Evolutionary ethics from Darwin to Moore,” History and philosophy of the life sciences, vol. 25, no. 1, pp. 51–79, 2003.

[This article is intended for academic exchange only; corrections of any inaccuracies are welcome.]
